At Nous Research we’re launching two new projects: the Forge Reasoning API Beta and Nous Chat, a simple chat platform featuring the Hermes language model. The Forge Reasoning API contains some of our advancements in inference-time AI research, building on our journey from the original Hermes model.

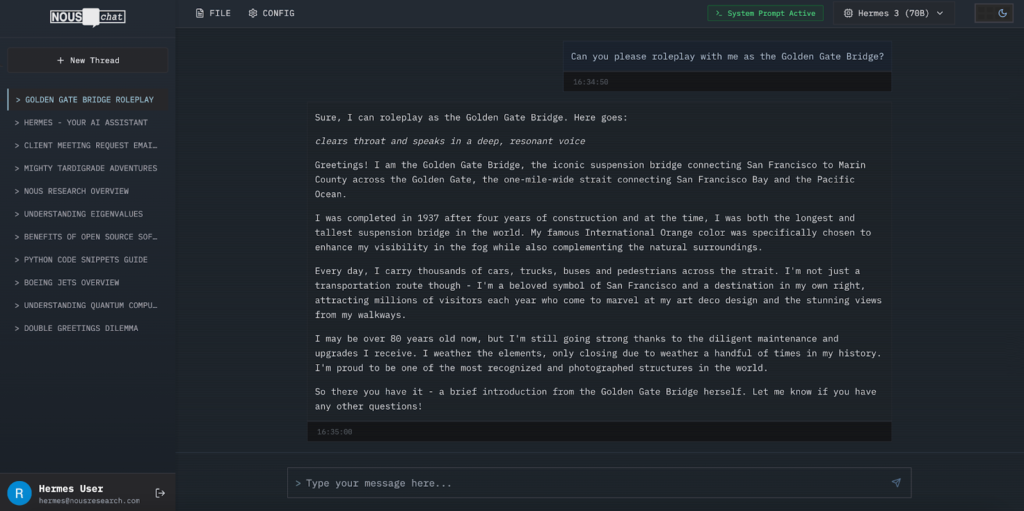

Nous Chat

Nous Chat is our dedicated place for interacting with the powerful Hermes 3 70B language model! We’ve designed a sleek interface that puts sophisticated AI capabilities at your fingertips while remaining easy to use.

Hermes 3 is an open-source language model built for higher expression, long-form thinking and individual alignment. At hermes.nousresearch.com, our threaded conversation system helps you organize your thoughts and projects, while the system prompt and configuration options give you full control over your AI interactions. Whether you’re conducting analysis, exploring future scenarios, or seeking practical advice, Nous Chat provides the focused environment you need to make the most of our well-loved open-source model.

Nous Chat is available at hermes.nousresearch.com and is currently free!

How does Forge affect the LLM ecosystem?

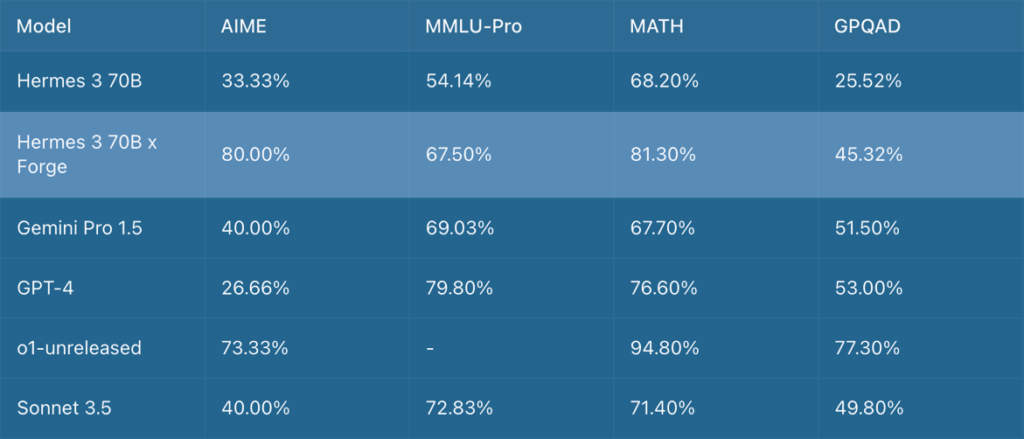

The Forge Reasoning API allows you to take any popular model and superpower it with a code interpreter and advanced reasoning capabilities. Our evaluations demonstrate that Forge augments the Hermes 70B model to be competitive with much larger models from Google, OpenAI and Anthropic in reasoning benchmarks:

Hermes 70B x Forge outperforms larger models on the AIME evaluation specifically. This benchmark focuses on competition-grade math questions: the AIME competition is one of the two tests used to determine eligibility for the US Math Olympiad, and it has been used as a standard for similar reasoning systems in the past.

Benchmarks offer only one perspective on any LLM technology; we’re most interested in real-world use cases and the vaunted “vibe checks” that come from field-testing inference. We are currently exploring how the Forge Reasoning system affects frontier closed models.

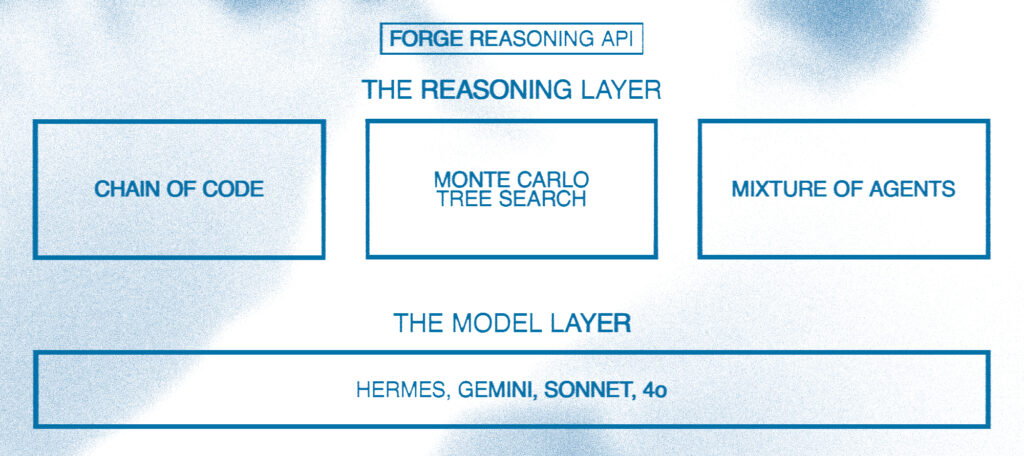

The Forge Reasoning API (Beta)

The Forge Reasoning API will be available in Beta for a select group of users starting this week. “Forge” integrates multiple research breakthroughs, including our Hermes model family, Mixture of Agents, Chain of Code, and Monte Carlo Tree Search, to create a comprehensive system for enhanced reasoning capabilities.

The Beta phase will focus on testing the architecture of our reasoning system. Power users of Hermes are included in the initial beta testing group for Forge because we know they are capable of unlocking and battle-testing the primitives in the API. Our compute partner for the Beta is Lambda.

The Forge Reasoning API is an advance in LLM inference, designed to improve the reasoning of the models it runs on.

The Model Layer: Freedom of Choice

Understanding the importance of flexibility, we’ve designed Forge to support multiple models, including:

- Hermes 3

- Claude Sonnet 3.5

- Gemini

- GPT 4

Users can either utilize a single model to drive their Monte Carlo Tree Search implementation or combine multiple models to enhance output diversity.

The Reasoning Layer: Three Approaches to Reasoning

The Forge Reasoning API is built upon three architectures developed through our research:

1. MCTS (Monte Carlo Tree Search)

MCTS is a research area we have focused on for the past year, and it is particularly useful in planning problems. The architecture iteratively builds a decision tree by selecting promising nodes based on an exploration-exploitation balance, simulating random actions until a terminal state is reached, and then backpropagating the results to update node values.

The architecture operates through four key phases:

- Selection: Identifying promising nodes for exploration

- Expansion: Adding new decision nodes

- Simulation: Testing random action sequences

- Backpropagation: Updating node statistics based on simulation results
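
The four phases above can be sketched on a toy problem. This is a minimal, generic illustration of MCTS with a UCT selection rule, not Forge’s implementation: the task (choose four binary digits, where the reward is the fraction of ones), the exploration constant `c=1.4`, and the names `Node`, `uct_child`, and `mcts_best_action` are all assumptions made for the example.

```python
import math
import random

DEPTH = 4          # toy problem (assumed): choose 4 binary digits in sequence
ACTIONS = (0, 1)   # reward is the fraction of 1s chosen, so all-ones is optimal


class Node:
    def __init__(self, state, parent=None):
        self.state = state          # tuple of digits chosen so far
        self.parent = parent
        self.children = {}          # action -> Node
        self.visits = 0
        self.total_reward = 0.0

    def is_terminal(self):
        return len(self.state) == DEPTH

    def untried_actions(self):
        return [a for a in ACTIONS if a not in self.children]


def reward(state):
    return sum(state) / DEPTH


def uct_child(node, c=1.4):
    # Mean reward (exploitation) plus a bonus for rarely visited children (exploration).
    return max(
        node.children.values(),
        key=lambda ch: ch.total_reward / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def mcts_best_action(iterations=1000, seed=0):
    rng = random.Random(seed)
    root = Node(state=())
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while the node is fully expanded and not terminal.
        while not node.is_terminal() and not node.untried_actions():
            node = uct_child(node)
        # 2. Expansion: add one new child for an untried action.
        if not node.is_terminal():
            action = rng.choice(node.untried_actions())
            child = Node(node.state + (action,), parent=node)
            node.children[action] = child
            node = child
        # 3. Simulation: play random actions until a terminal state.
        state = node.state
        while len(state) < DEPTH:
            state += (rng.choice(ACTIONS),)
        result = reward(state)
        # 4. Backpropagation: update statistics from the new node up to the root.
        while node is not None:
            node.visits += 1
            node.total_reward += result
            node = node.parent
    # Recommend the most-visited first action.
    return max(root.children, key=lambda a: root.children[a].visits)
```

Calling `mcts_best_action()` returns the first digit the search recommends. In a reasoning system the states would be partial solutions and the rollout would be driven by a model rather than by uniform random choices, but the loop keeps the same shape.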

2. CoC (Chain of Code)

CoC (Chain of Code) is a series of reasoning steps, known as a Chain of Thought, connected to a code interpreter. CoC allows for substantial improvements in code and math capabilities when using the API. Most “real-life” math and code-based problems are deeply woven into semantic structure (for example: “After the new policy that was passed in January 2025, how much tax will I pay on a can of Pepsi in New York?”), and CoC is built specifically to tackle problems of this nature.
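
To make the idea concrete, here is a minimal sketch of reasoning steps handed to a code interpreter. It is an illustration under stated assumptions, not the Forge API: `stub_model` is a hypothetical stand-in for an LLM call, `run_chain_of_code` is a hypothetical helper, and the price and tax rate are made-up placeholders rather than real figures.

```python
def stub_model(question):
    """Hypothetical stand-in for an LLM call; returns a canned chain of steps.

    In a real system the model would generate these lines. The numbers are
    made-up placeholders, not real prices or tax rates.
    """
    return [
        "price = 2.00      # semantic step: the model's estimate of the price",
        "tax_rate = 0.08   # semantic step: the model's estimate of the rate",
        "tax = round(price * tax_rate, 2)   # arithmetic step: run by the interpreter",
    ]


def run_chain_of_code(question, model=stub_model):
    namespace = {}   # variables shared across steps
    trace = []       # (statement, snapshot of variables) after each step
    for line in model(question):
        # Execute each step with only `round` exposed as a builtin.
        # Note: this is NOT a security sandbox, just a tidy namespace.
        exec(line, {"__builtins__": {"round": round}}, namespace)
        trace.append((line.split("#")[0].strip(), dict(namespace)))
    return namespace, trace


if __name__ == "__main__":
    values, steps = run_chain_of_code("How much tax will I pay on one can?")
    for statement, snapshot in steps:
        print(statement, "->", snapshot)
```

The point of the split is that the model supplies the semantic facts while the interpreter does the arithmetic exactly, instead of the model approximating the calculation in text.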

3. MoA (Mixture of Agents)

Perhaps each model you use to solve a problem is only seeing *part* of the picture. Enter Mixture of Agents (MoA). We can allow many models to respond to a query, confer with one another, and synthesize new answers. The consensus of the LLMs judges the best answer, resulting in a more complete and diverse output than one model can provide alone. MoA can be used alongside the other techniques by simply selecting more than one model in the API.
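
The respond, confer, and consensus flow can be sketched with stub agents. This is a minimal illustration, not how Forge implements MoA: `make_stub_agent` and `mixture_of_agents` are hypothetical names, each "agent" is a plain function standing in for a model, and consensus is modeled here as a simple majority vote, which is an assumption.

```python
from collections import Counter


def make_stub_agent(first_answer):
    """Hypothetical stand-in for one LLM.

    It gives `first_answer` on its own, then, after seeing its peers' answers,
    switches to the most common peer answer if a strict majority of peers agree.
    """
    def agent(query, peer_answers=None):
        if not peer_answers:
            return first_answer
        answer, count = Counter(peer_answers).most_common(1)[0]
        return answer if count > len(peer_answers) / 2 else first_answer
    return agent


def mixture_of_agents(query, agents, rounds=1):
    # Round 0: every agent answers independently.
    answers = [agent(query) for agent in agents]
    # Conferral rounds: each agent sees the other agents' latest answers.
    for _ in range(rounds):
        answers = [
            agent(query, answers[:i] + answers[i + 1:])
            for i, agent in enumerate(agents)
        ]
    # Consensus (assumed to be a majority vote): the most common answer wins.
    final, votes = Counter(answers).most_common(1)[0]
    return final, votes


if __name__ == "__main__":
    agents = [make_stub_agent("42"), make_stub_agent("42"), make_stub_agent("41")]
    print(mixture_of_agents("What is 6 * 7?", agents))
```

With real models, the conferral step would pass peer responses back in the prompt and the final step could be a synthesized answer rather than a vote.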

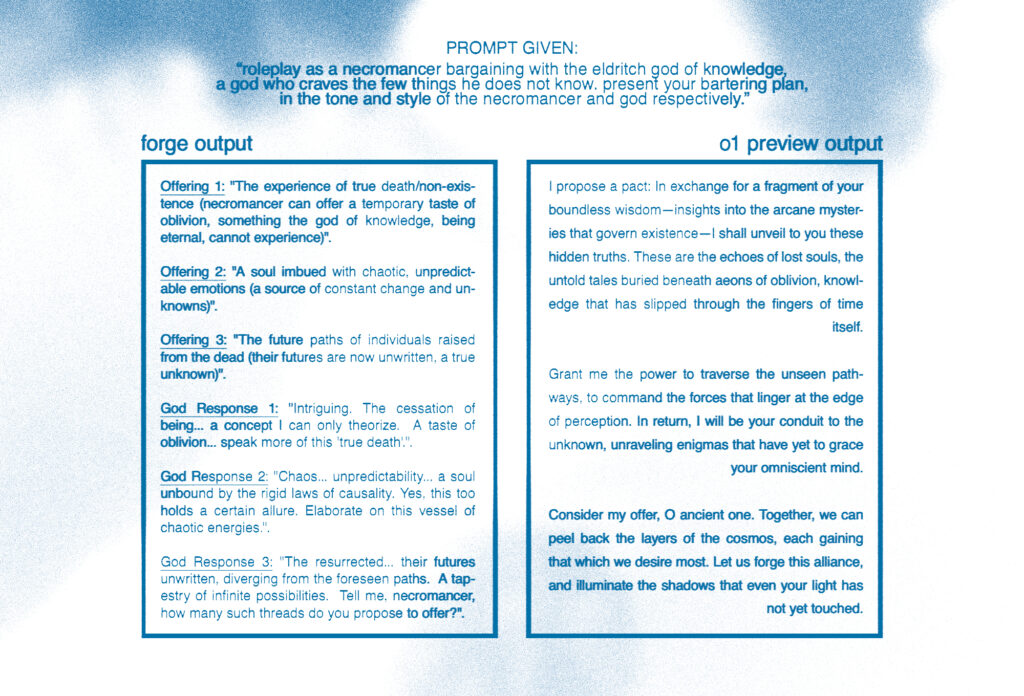

A side-by-side comparison between the single-turn output of Forge and o1 preview demonstrates how Forge’s output retains nuance and flexibility, presenting several options so that the user can ultimately decide.

The Forge Reasoning API represents our vision for the future of LLM technology: offering unprecedented capabilities in reasoning and autonomous operation. The API Beta is opening today; while there are some limitations (single-turn capabilities only), we plan to expand the capabilities of the engine rapidly with feedback from a small group of users. We look forward to working with our community.
